case study:

Making a music video when you can’t get the band back together

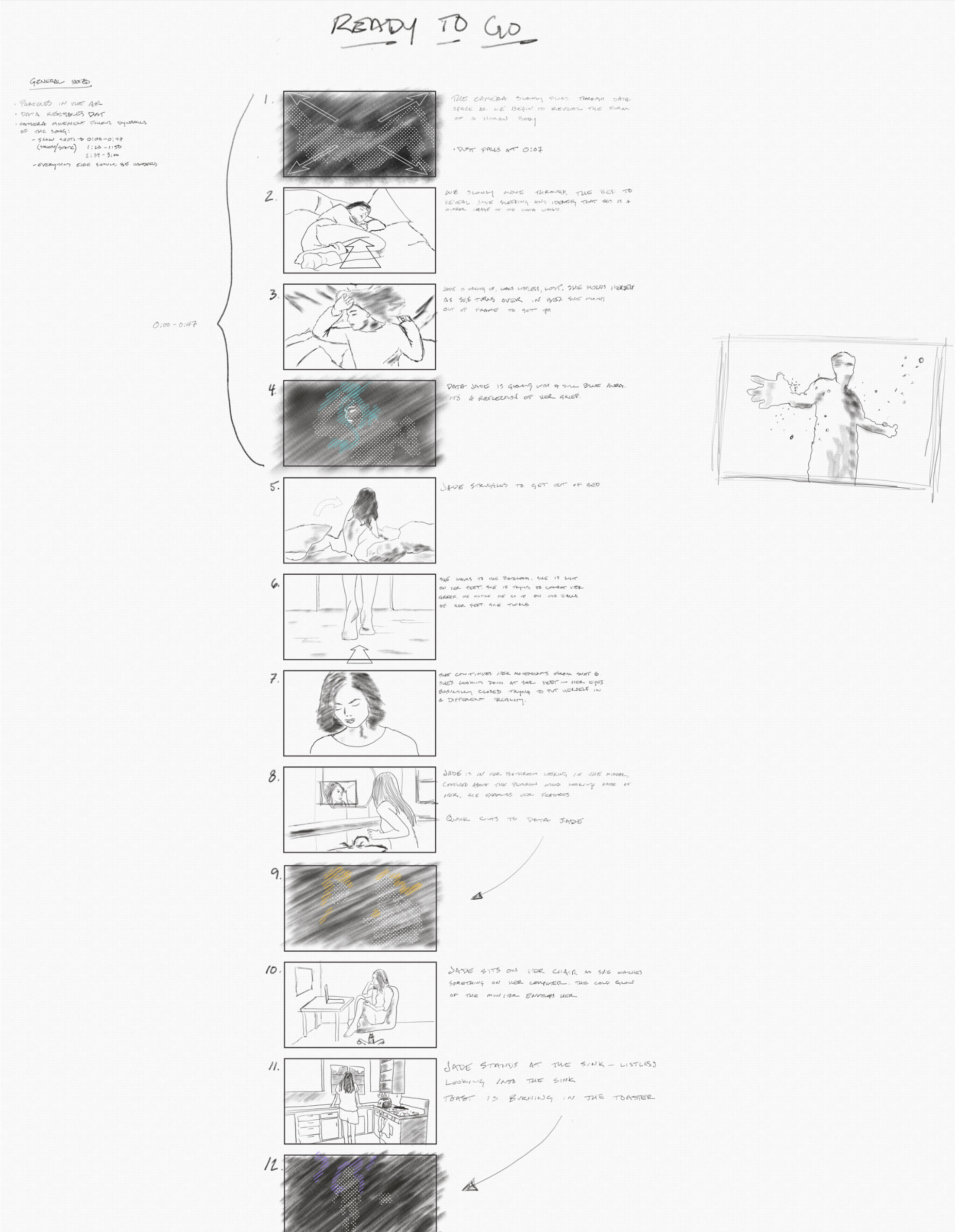

In January 2020 B.C., Ben Epand, the guitarist for the LA-based indie rock band KidEyes, first contacted Super A-OK to conceive a video treatment for their upcoming single, “Ready to Go.” The concept we created involved a live-action shoot in which we would simultaneously capture film and depth data via a Microsoft Kinect. We wanted to tell a story of personal growth by presenting one character’s life on film and visualizing their deeper emotional expression in a 3D world.

(original storyboard):

As our March shoot date approached and the specter of a global pandemic loomed, it became apparent that we would not be shooting anything in person any time soon.

We took a few days to regroup and then started to hash out ways to approach the video with the new restrictions imposed by this weird new world. Still drawn to the idea of conveying the concept of personal growth through light and texture, we began to investigate ways to accomplish that with everyone remaining in isolation.

I am a big fan of doing effects in camera as much as possible, but this situation simply would not allow it. We made it our mission to achieve that authentic in-camera look, avoiding the slickness typically associated with 3D effects. Most of our visual inspiration came from the pre-digital world of ’70s and ’80s TV title sequences.

visual inspiration

After doing a few tests of our own, we were confident that with the correct lighting we could manipulate iPhone footage collected from the far-flung band members to get what we wanted.

proofs of concept utilizing iPhone footage shot under quarantine

3D animators are like “Trapcode, that’s cool I guess.”

Once we were sure this was going to work, each band member was instructed to set up their iPhone to record a static shot from about 6’ away (social distancing even then), using a single light source to help create a dramatic range of light from shadows to highlights. In retrospect, a little more light in the shadows would have helped with the final footage, but it was nothing that couldn’t be corrected in post-production.

We collected all the iPhone footage and converted it to grayscale, carefully matching the contrast ratios across clips. This gave us consistent luminance values to work from when converting them to depth and light values. I decided to map the brightest points to the particles furthest from their origin. The next step was mapping those same points to the brightest glowing particles.
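For readers who want to experiment with the same idea outside a compositing tool, here is a minimal Python sketch of the luminance mapping. It is not the particle setup we used on the video. The function name `luminance_to_particles`, the `max_depth` and `max_glow` parameters, and the choice of Rec. 709 weights for the grayscale conversion are all assumptions made for illustration.

```python
import numpy as np

def luminance_to_particles(frame_rgb, max_depth=1.0, max_glow=1.0):
    """Map each pixel's brightness to a particle displacement and a glow value.

    frame_rgb: array of shape (height, width, 3) with 8-bit RGB values.
    Returns two (height, width) arrays: depth and glow.
    """
    rgb = np.asarray(frame_rgb, dtype=np.float64) / 255.0

    # Grayscale conversion. Rec. 709 weights are an assumption here;
    # any consistent grayscale conversion would serve the same purpose.
    luma = rgb @ np.array([0.2126, 0.7152, 0.0722])

    # Stretch every frame to the same 0..1 range so that clips shot on
    # different phones produce comparable numbers.
    lo, hi = luma.min(), luma.max()
    if hi > lo:
        norm = (luma - lo) / (hi - lo)
    else:
        norm = np.zeros_like(luma)  # flat frame: no displacement, no glow

    depth = norm * max_depth  # brightest pixels travel furthest from origin
    glow = norm * max_glow    # the same pixels also glow the brightest
    return depth, glow
```

Calling `luminance_to_particles(frame, max_depth=2.0)` on a single frame returns one displacement and one glow value per pixel, which could then drive whatever particle system you prefer.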

conversion of iPhone footage to treated footage

With the corrected iPhone footage, we assembled a rough cut first to keep our 3D pipeline from becoming unwieldy. Once that was complete, we added the chromatic flares. Our goal was to evolve the effect over the course of the song to show the growth that the character goes through.

All in all, it was a great challenge to make something at a time when we’re all stuck at home. Restrictions are often the best way of arriving at something exciting.